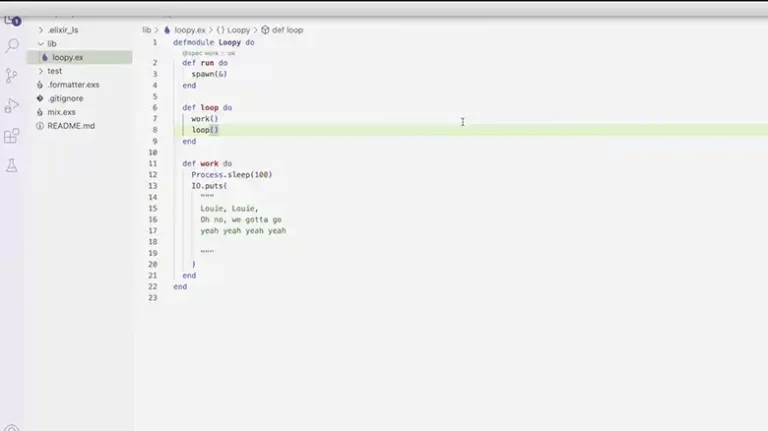

Can I get Erlang OTP behaviors in C Nodes?

Erlang is known for its strength in building concurrent, fault-tolerant, distributed systems, and the Erlang/OTP behaviors are a key part of that toolkit. Behaviors such as gen_server, gen_fsm (deprecated since OTP 20 in favor of gen_statem), and gen_event separate generic process logic from application-specific callbacks, which simplifies the development of robust, distributed applications. But what if you need to integrate non-Erlang components into your…